PowerPath Upgrade

This is actually fairly easy as far as upgrades go. If you already have a working install of some prior version of PowerPath/VE, you should not encounter any issues as long as you do everything in the right order. So let’s get started.

As far as I know, Solutions Enabler, HBA drivers and firmware, Naviagent, switch firmware, storage Ucode, and ECC agents will never notice your upgrade. PowerPath is a very specific piece of software targeting a very specific need; almost every other product will simply use PowerPath if it is present but will never depend on it.

This first section is only for people who are already running, or choose to begin running, the EMC vApp virtual appliance for PowerPath licensing. You should do this (read the first item to see why), but if you don’t want to, skip to the next section:

- First and foremost, I recommend that you download and begin to use EMC’s vApp virtual appliance for managing your PowerPath licenses. Everything I’m seeing online suggests they’re ultimately going to require it, so you may as well get it out of the way now. It also makes license management not too difficult, although their whole license management model for PowerPath/VE in served mode still completely sucks from an ongoing maintenance perspective, since you’ll lose your licenses with each ESXi host reboot (more on that later). In any case, for a new install of the vApp, you’ll want to (currently) download PowerPath_Virtual_Appliance_1.2_P01_New_Deployment.zip (LINK).

- If you already have the vApp running and it’s older than version 1.2, you’re going to need to upgrade, because only 1.2 can serve licenses to PowerPath/VE 5.9. I actually had a version 1.0 appliance running; for upgrades there is a separate download, PowerPath_Virtual_Appliance_1.2_P01_Upgrade_only.zip (LINK). The upgrade is super easy: extract the ISO, stick it on a datastore your vApp has access to, and map a CD-ROM drive to it. Next, connect via https to your vApp’s IP address on port 5480; it will want the root user/pass. Tell it to check for upgrades; it knows to look in the ‘cdrom drive’, so it will find the upgrade and run it. Then you just need to SSH in and reboot it and you’re done. You should be able to move along to the next section now too.

- If you are setting up your vApp for the first time and your PowerPath/VE licenses are not of the ‘served’ variety, you’ll need to get EMC to reissue them, which can be an adventure. Same goes for those of you installing PowerPath/VE for the first time along with the vApp, although your licenses may already be of the served variety. If you get lucky enough to find the right person able to do this, they’ll send back a text file with some info and a .lic extension. Put it in /etc/emc/licenses/ on your vApp server and run: /opt/emc/elms/lmutil lmstat -a -c /etc/emc/licenses

- That should show the number of licenses and which ones are in use, if any, at that point.

- If you’re upgrading existing PowerPath/VE licenses, and your licenses were not of the served variety, you should register each of your vSphere hosts by using the command:

The first time you use the rpowermt command it will probably make you establish a lockbox password where it stores host credentials; don’t lose that password.

- After registering your hosts, you can check them via

- Should be good to go now.
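The license check in the list above prints a fairly long report. As a rough sketch, the issued and in-use counts can be pulled out of it with awk. This assumes the usual lmstat line format ("Users of FEATURE: (Total of N licenses issued; Total of M licenses in use)"); the function name license_summary is made up for illustration and is not part of any EMC tooling.

```shell
# Hypothetical helper: summarize "lmutil lmstat -a" output read from stdin.
# Assumes the standard lmstat "Users of ..." line layout; adjust if yours differs.
license_summary() {
    awk '/^Users of / {
        feature = $3
        sub(/:$/, "", feature)          # strip the trailing colon from the feature name
        # $6 = licenses issued, $11 = licenses in use
        printf "%s issued=%s in_use=%s\n", feature, $6, $11
    }'
}

# On the vApp (command taken from the text above):
#   /opt/emc/elms/lmutil lmstat -a -c /etc/emc/licenses | license_summary
```

If the summary prints nothing, look at the raw lmstat output first; the parsing here is only as good as the assumed line format.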

Okay so we’ll just work on the assumption that you’ve got all your PowerPath/VE license issues worked out at this point and you’re safe to upgrade. Before we get to that though, some pre-req’s; this section will be all about pre-req’s only.

Do you use EMC’s Virtual Storage Integrator in your vSphere Client? If no, why not? It gives you all kinds of great information about your storage arrays that takes forever to dig up using Unisphere. If yes, then you need to get some things up to date (and don’t skip step 4) before throwing PowerPath/VE 5.9 on your servers:

- Upgrade the base Unified Storage Management plugin itself; you want to get that up to at least version 5.6.1.18. Download is located at https://download.emc.com/downloads/DL49368_VSI_Unified_Storage_Management_5.6.zip

- Next, update the Path Management bundle which lets you set up your multipath policies all from the vSphere client. Download is located at https://download.emc.com/downloads/DL50613_VSI_Unified_Storage_Management_5.6.1.zip

- Next, update the Storage Viewer. Download that at https://download.emc.com/downloads/DL50614_VSI_Storage_Viewer_5.6.1.zip

- Unfortunately we’re not done yet. You’ll also want to update the Remote Tools bundle from 5.x to 5.9. I had the version 5.8 bundle and didn’t remember to upgrade it, so I was getting an error about “PowerPath/VE is not controlling any LUNs” when I would go to the EMC VSI tab in the client. After upgrading Remote Tools to 5.9 that issue went away: PowerPath/VE 5.9 Stand-Alone Tools Bundle

- If you use the AppSync app, there’s an update for that too; I don’t personally use it so I have no idea what it does: https://download.emc.com/downloads/DL50615_VSI_AppSync_Management_5.6.1.zip

Okay, almost done. If you have a VNX or CLARiiON, I think you’ll need the Navisphere CLI too if you’re wanting to mess with the block side of the VNX (or CLARiiON) from the vSphere client:

If your NaviCLI doesn’t seem to work right, check this page for an issue I encountered: http://www.ispcolohost.com/2013/11/21/connecting-emc-navicli-to-a-clariion-cx4/

There is also a Unisphere CLI and I believe that is specific to the VNXe; I do not know which one is appropriate.

Whew; moving on…

Time to install PowerPath/VE 5.9 itself. I assume you’ve already downloaded it but just in case grab it (PowerPath_VE_5.9_for_VMWARE_vSphere_Install_SW.zip) from the following link if not:

All you actually need is that zip file; vSphere will take that directly. In the vSphere Client, the computer one, not the web one (since the new and improved 5.5 client can’t actually do updates, amongst its overall sucking), click to Home -> Update Manager. Select the Patch Repository tab and then “Import Patches”. Feed it the zip file you just downloaded for PowerPath/VE 5.9.

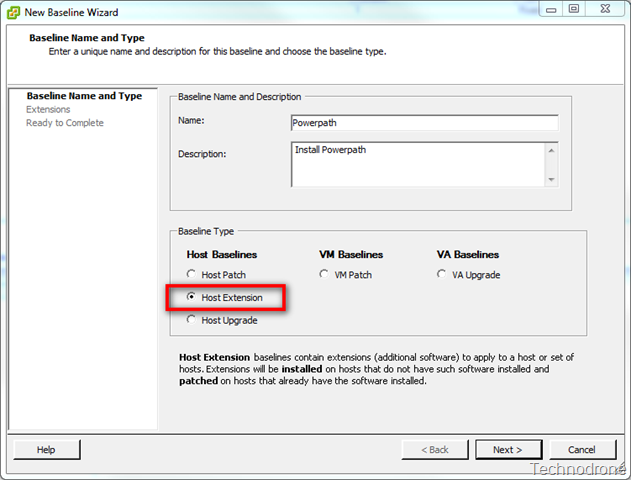

Next, select the Baselines and Groups tab. Click the “Create” link to create a new host baseline which you’ll select to be a type “Host Extension” on the first screen. Next screen, select the PowerPath/VE 5.9 patch; sort them by name and it will be easy to find. Click the down arrow to move it to the extensions to add box. Finish.

Before proceeding further, please make sure you’re up to date on patches on the vSphere side. The PowerPath/VE 5.9 release notes specify which versions of ESXi PowerPath/VE 5.9 is compatible with, and you don’t want to go installing it if your ESXi version is not new enough. Here are the release notes:

You’re good to go if you’re on ESXi 5.5, ESXi 5.1 Update 1 or better (i.e. build 838463 or higher), or ESXi 5.0 with every single available update applied. I’ve only run it on ESXi 5.5 and on ESXi 5.1 with a build number in the 900,000 to 1,xxx,000 range (didn’t look that closely since I knew I was past Update 1), so your mileage may vary on older builds. If you’re not on one of these, do a patch download and remediation, reboot, and get up to date before installing 5.9.
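A quick way to sanity-check a host against the minimums above is to look at its version string. The sketch below assumes the string looks like the output of `vmware -v` on the ESXi shell (for example "VMware ESXi 5.1.0 build-838463"). The helper name esxi_ready_for_pp59 is made up, and the 838463 threshold is simply copied from this post, so confirm it against the release notes before trusting it.

```shell
# Hypothetical helper: compare a `vmware -v` style string against the minimums
# quoted in this post. The 838463 threshold is copied from the text above,
# not taken from the release notes.
esxi_ready_for_pp59() {
    ver=$(printf '%s\n' "$1" | awk '{print $3}')       # e.g. 5.1.0
    build=$(printf '%s\n' "$1" | sed 's/.*build-//')   # e.g. 838463
    case "$build" in
        ''|*[!0-9]*) echo "unknown"; return 0 ;;       # no numeric build found
    esac
    case "$ver" in
        5.5.*) echo "ok" ;;
        5.1.*) if [ "$build" -ge 838463 ]; then echo "ok"; else echo "patch first"; fi ;;
        5.0.*) echo "check release notes" ;;           # the post gives no build number for 5.0
        *)     echo "unknown" ;;
    esac
}

# Usage on a host: esxi_ready_for_pp59 "$(vmware -v)"
```

For ESXi 5.0 the function deliberately refuses to guess, since "every single available update" does not map to a build number given here.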

Okay, time to install. If you installed the previous PowerPath/VE using Update Manager, go back to Home -> Hosts & Clusters -> pick a host of your choice, Update Manager tab, right click on the previous attached baseline for your old PowerPath install and detach it. If you installed PowerPath/VE manually via command line or something else, then no need to worry about it since you’ll be replacing it anyway. If this is a new install, then just proceed.

Click “Attach…” and attach your new PowerPath/VE 5.9 baseline to the host. Click to remediate, let it reboot, and now PowerPath/VE 5.9 should be up and running. One caveat: I found a blog from someone who had PowerPath try to take over their local storage, leaving ESXi with boot issues. I have not had this happen on the 20 or so servers I’ve done an install on, fresh and upgrades; the servers I ran this on all have a local RAID controller and storage just for ESXi, so I’m not sure why it affected him and not me. Here’s a link to that if you encounter the problem:

Okay, last step is to license PowerPath/VE if this was a new install, or confirm your licenses are still working. Oh yeah, and an EMC rant is coming a bit later. If you’re not using served licenses from an EMC vApp ELMS server, well then you’re on your own. If you are using the vApp appliance, SSH into it and the steps are very simple:

- This first step is safe to do even if this was an upgrade. So, first up, for new PowerPath/VE installs, or upgrades, register each of your vSphere hosts by using the command:

The first time you use the rpowermt command it will probably make you establish a lockbox password where it stores host credentials; don’t lose that password. After registering your hosts, you can check them via

- A new option in 5.8 and 5.9 that I like to turn on is automatic restoration of paths that had been removed from use for errors. By default, PowerPath can wait quite a while to restore a path to service if it had failed. For example, busy LUN and an SFP fails, taking the link down. PowerPath immediately fails it, no big deal since it probably still had other paths or why else would you be using it? But that dead link even after repair will stay in a dead state for what can be quite a long time, days or more. Setting ‘reactive autorestore’ on will cause it to periodically test the path and return it to service after it passes a test. To do that:

where 192.0.2.1 is your ESXi host.

- Check all the paths and devices PowerPath is managing:

You can of course do this via the vSphere client now too, the regular one that is, not the horrible web version. If everything looks good, go ahead and turn lockdown mode back on on your host for security and move on to the next host. My rant below is about this final step just FYI.
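The per-host steps above (register, check the registration, turn on reactive autorestore, list paths and devices) can be sketched as a dry run that only prints the commands for review. The rpowermt subcommand spellings below are my assumption of the usual syntax, not something shown on this page, and pp_post_install_cmds is a made-up helper name; check each command against the rpowermt documentation for your PowerPath/VE version before running anything.

```shell
# Sketch only: prints the rpowermt commands for one ESXi host instead of running them.
# Subcommand names are assumptions about the rpowermt CLI; verify before use.
pp_post_install_cmds() {
    host=$1
    echo "rpowermt register host=$host"                      # license the host
    echo "rpowermt check_registration host=$host"            # confirm the license took
    echo "rpowermt set reactive_autorestore=on host=$host"   # periodically retest failed paths
    echo "rpowermt display dev=all host=$host"               # list managed paths and devices
}

# Example: pp_post_install_cmds 192.0.2.1
```

Printing rather than executing keeps the lockbox password prompt and any typos out of the picture until you have read the commands over.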

My PowerPath/VE & EMC rant. So you probably run your ESXi hosts in lockdown mode for security, right? And you’re probably running PowerPath/VE too or you wouldn’t be on this page to begin with. Well, PowerPath, for many years now, has had an incredibly obnoxious issue that affects hosts using ‘served’ licenses. If you put the host in lockdown mode, your EMC ELMS vApp license server will not be able to communicate with that host using the rpowermt command. That would not be a huge deal, since how often do you really need to mess with the PowerPath licenses on your hosts? Just take them out of lockdown mode when you need to do something.

Well, not so fast. The f’ing PowerPath/VE software on the vSphere hosts tends to lose its license nearly every time a host is rebooted. So if you keep up to date on your VMware patches for your ESXi installs, you’re going to be rebooting hosts somewhat regularly, which means your PowerPath licenses are going to fail somewhat regularly, which means you’re going to have to go through the huge hassle of taking every host that reboots out of lockdown, running some fucking commands on your ELMS vApp to re-license, then putting lockdown mode back on, every single time you reboot a host.

The PowerPath folks tell you that’s not that big a deal, because even in unlicensed mode PowerPath/VE has the same features, so you won’t lose path redundancy or multipath I/O benefits and can just fix the licenses later as needed. Well, that would be great if not for the fact that the EMC Virtual Storage Integration folks are cranking out new features by the day into the EMC VSI app for vSphere, and when the licenses go to unlicensed mode, you can’t use many of the features in the app.

This has been going on for years now and they seem to be no closer to fixing it.

Upgrading PowerPath in a dual VIO server environment

When upgrading PowerPath in a dual Virtual I/O (VIO) server environment, the devices need to be unmapped in order to maintain the existing mapping information.

To upgrade PowerPath in a dual VIO server environment:

1. On one of the VIO servers, run lsmap -all. This command displays the mapping between physical, logical, and virtual devices.

    $ lsmap -all
    SVSA            Physloc                          Client Partition ID
    --------------- -------------------------------- -------------------
    vhost1          U8203.E4A.10B9141-V1-C30         0x00000000

    VTD                   vtscsi1
    Status                Available
    LUN                   0x8100000000000000
    Backing device        hdiskpower5
    Physloc               U789C.001.DQD0564-P1-C2-T1-L67

2. Log in on the same VIO server as the padmin user.

3. Unconfigure the PowerPath pseudo devices listed in step 1 by running rmdev -dev VTD -ucfg, where VTD is the virtual target device name.

For example, rmdev -dev vtscsi1 -ucfg

The VTD status changes to Defined.

Note: Run rmdev -dev

4. Upgrade PowerPath

   1. Close all applications that use PowerPath devices, and vary off all volume groups except the root volume group (rootvg). In a CLARiiON environment, if the Navisphere Host Agent is running, type:

      /etc/rc.agent stop

   2. Optional. Run powermt save in PowerPath 4.x to save the changes made in the configuration file.

   Run powermt config.

   5. Optional. Run powermt load to load the previously saved configuration file.

      When upgrading from PowerPath 4.x to PowerPath 5.3, an error message is displayed after running powermt load, due to differences in the PowerPath architecture. This is an expected result and the error message can be ignored. Even if the command succeeds in updating the saved configuration, the following error message is displayed by running powermt load:

      host1a 5300-08-01-0819:/ #powermt load
      Error loading auto-restore value
      Warning:Error occurred loading saved driver state from file /etc/powermt.custom
      …
      Loading continues…
      Error loading auto-restore value

      When you upgrade from an unlicensed to a licensed version of PowerPath, the load balancing and failover device policy is set to bf/nr (BasicFailover/NoRedirect). You can change the policy by using the powermt set policy command.

5. Run powermt config.

6. Log in as the padmin user and then configure the VTD unconfigured in step 3 by running cfgdev -dev VTD, where VTD is the virtual target device name.

For example, cfgdev -dev vtscsi1

The VTD status changes to Available.

Note: Run cfgdev -dev

7. Run lspath -h on all clients to verify all paths are Available.

8. Perform steps 1 through 7 on the second VIO server.
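When a VIO server maps many devices, it helps to generate the step 3 and step 6 commands from the lsmap output rather than typing each VTD by hand. The sketch below reads saved `lsmap -all` output on stdin, picks the VTDs whose backing device is a PowerPath pseudo device (hdiskpower*), and prints the rmdev and cfgdev commands for review. Nothing is executed. The helper names vtd_names and vio_plan are made up, the parsing assumes the output layout shown in step 1, and awk may not be available in the restricted padmin shell, so this is meant to be run wherever you can save the lsmap output.

```shell
# Sketch: list VTDs backed by PowerPath pseudo devices from `lsmap -all` output.
vtd_names() {
    awk '$1 == "VTD" { vtd = $2 }
         $1 == "Backing" && $2 == "device" && $3 ~ /^hdiskpower/ { print vtd }'
}

# Print (not run) the unconfigure and reconfigure commands around the upgrade.
vio_plan() {
    vtds=$(vtd_names)
    for v in $vtds; do echo "rmdev -dev $v -ucfg"; done     # step 3
    echo "# upgrade PowerPath (step 4), then run powermt config (step 5)"
    for v in $vtds; do echo "cfgdev -dev $v"; done          # step 6
}

# Example: vio_plan < lsmap_all.txt
```

Review the printed plan against the lsmap output before running any of it, and repeat the whole procedure on the second VIO server only after the first one is back and all client paths show Available.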